The AI super-cycle has thrust semiconductors into the center of global strategy, turning companies like Nvidia, Broadcom, and AMD into household names. All three compete in overlapping arenas (GPUs, accelerators, networking, and custom silicon), but their paths forward need not be confined to a zero-sum “red ocean” fight. Applying the Blue Ocean Strategy lens, we can see how each player has already sidestepped brutal head-to-head competition and where its next uncontested waters may lie.

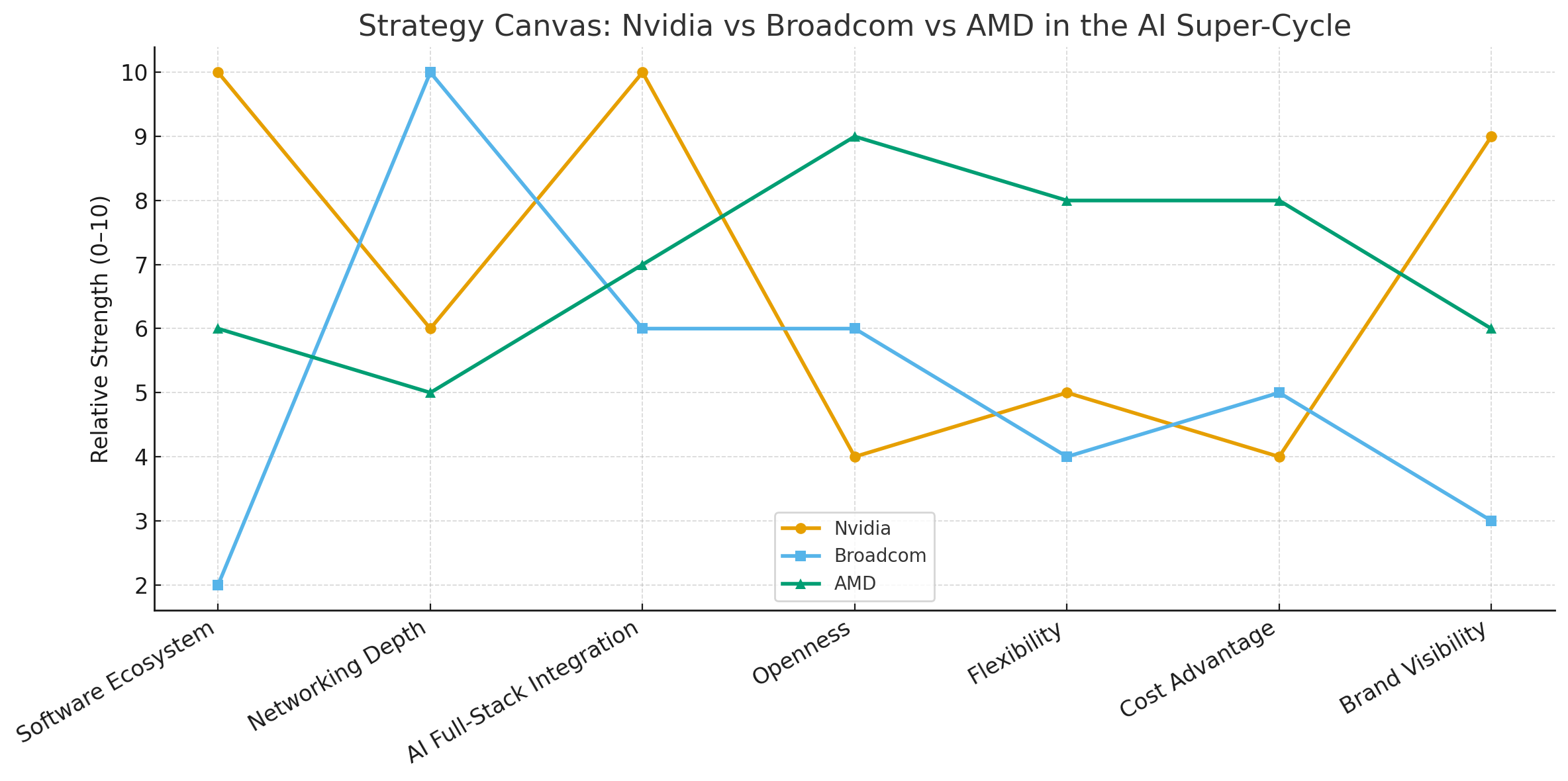

Here’s the strategy canvas comparing Nvidia, Broadcom, and AMD.

It shows how their strengths diverge: Nvidia dominates on software ecosystem and full-stack integration, Broadcom owns networking depth but has weak brand visibility, and AMD positions itself as the flexible, open, cost-friendly challenger. Each curve reflects a different blue ocean they’ve carved within the AI market.

Nvidia: Expanding Beyond Chips Into the AI Operating System

Nvidia’s genius blue ocean move was not just building faster GPUs, but creating CUDA and the software ecosystem that made them indispensable to developers. In the “red ocean” of pure silicon speed, AMD and Intel could theoretically compete. But in the “blue ocean” of being the operating system of AI, Nvidia became irreplaceable.

Eliminate: Dependency on CPU-centric compute; commoditized driver layers.

Reduce: Long, traditional chip design cycles, in favor of faster iteration.

Raise: Investment in developer ecosystems, frameworks, and AI-optimized libraries.

Create: A full-stack AI platform (CUDA, cuDNN, TensorRT, DGX systems, Omniverse, NIM microservices).

Looking ahead, Nvidia’s next blue ocean is agentic AI infrastructure. It is positioning itself not just as a chipmaker but as the backbone of AI agents, providing inference-optimized GPUs, networked “AI factories,” and cloud-native inference APIs. A second blue ocean is sovereign AI stacks: offering countries turnkey AI platforms that reduce their dependence on hyperscalers. The risks are regulatory scrutiny and over-concentration, but Nvidia’s culture of building software moats around hardware remains its sharpest edge.

Broadcom: The Quiet Architect of AI Networks

Broadcom rarely gets the headlines Nvidia does, but its blue ocean play has been just as clever: rather than compete directly in GPUs, it owns much of the plumbing of the AI data center. Most hyperscalers building AI clusters rely on Broadcom’s networking chips, interconnects, or custom ASICs.

Eliminate: The need for hyperscalers to buy networking from many small vendors.

Reduce: Reliance on general-purpose CPUs for data movement.

Raise: Bandwidth, efficiency, and reliability of interconnects.

Create: A dominant position in AI networking silicon (switches, SerDes, PCIe, optical).

Broadcom’s future blue oceans may emerge in AI-optimized networking fabrics (making interconnects as critical as GPUs in system design), quantum-era networking layers (where secure, ultra-low-latency links become essential), and an expanding custom ASIC business for hyperscalers (where it acts as the invisible design partner behind chips for customers such as Google and Meta). Its challenge is visibility, since Broadcom isn’t a consumer brand, but its strength is the opposite of Nvidia’s: it thrives as irreplaceable infrastructure that few outsiders even think about.

AMD: The Challenger Seeking Its Own Blue Ocean

AMD has long played catch-up, and its survival has always depended on finding side doors. It undercut Intel in CPUs, then leaned on its open-source ROCm software stack to position itself against Nvidia’s proprietary CUDA. Its MI300 accelerators show AMD is serious about AI, but if it simply mirrors Nvidia, it risks drowning in the red ocean of GPU wars.

Eliminate: Proprietary lock-in wherever possible; AMD leans on open ecosystems.

Reduce: Price premiums and pricing complexity, offering hyperscalers more attractive economics.

Raise: Flexibility; AMD chips are often more customizable.

Create: A credible second source for AI accelerators, which regulators and hyperscalers need to keep Nvidia in check.

AMD’s prospective blue oceans lie in hybrid architectures (combining CPU, GPU, and memory coherently), edge AI accelerators (smaller, power-efficient GPUs for inference outside data centers), and custom co-design with hyperscalers (mirroring its semi-custom success with PlayStation/Xbox). If AMD doubles down on being the “open, flexible alternative” in AI hardware, it could cultivate a distinct demand curve—especially if governments and cloud providers grow nervous about Nvidia’s dominance.

Strategy Canvas: Diverging Curves in the AI Market

Nvidia plots high on software ecosystem, AI full-stack integration, and premium pricing, but lower on openness.

Broadcom scores highest on networking depth, low on consumer mindshare, and near zero on developer ecosystem.

AMD emphasizes cost flexibility, open standards, and co-design potential, but lags Nvidia on ecosystem lock-in.

Each diverges sharply from the “red ocean” of generic silicon vendors: Nvidia created an AI OS, Broadcom a networking backbone, and AMD a flexible challenger role.

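Future Blue Oceans: Probability-Weighted Outlook

The scenarios below can overlap, so their probabilities are independent estimates rather than shares of a single outcome and need not sum to 100%.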

Nvidia as AI OS & Agent Infrastructure (65% likely): Nvidia extends CUDA into a global inference platform, bundling software and silicon.

Broadcom as AI Networking Monopolist (55% likely): AI data centers become bottlenecked by interconnects, and Broadcom owns the rails.

AMD as Open, Flexible Alternative (40% likely): Wins share via semi-custom, edge AI, and open ecosystems.

All three squeezed by sovereign demands & new entrants (25% likely): Governments or open-hardware coalitions disrupt the current order.

What’s striking is how different their blue oceans are: Nvidia sells an AI future, Broadcom sells the rails, and AMD sells choice. Together, they don’t just compete; they define the terrain of the AI era. The next five years will decide whether their oceans remain blue or slowly turn red as hyperscalers, governments, and startups look for ways to break their dominance.